Why it matters: AMD is in the unique position of being the primary competitor to Nvidia on GPUs – for both PCs and AI acceleration – and to Intel on CPUs – for both servers and PCs – so its efforts are getting more attention than they ever have. Fittingly, the official opening keynote for this year’s Computex was delivered by AMD CEO Dr. Lisa Su.

Given AMD’s wide product portfolio, it isn’t surprising that Dr. Su’s keynote covered a broad range of topics – even a few unrelated to GenAI (!). The two key announcements were the new Zen 5 CPU architecture (covered in full detail here) and a new NPU architecture called XDNA2.

What’s particularly interesting about XDNA2 is its support for the Block Floating Point (Block FP16) data type, which offers the speed of 8-bit integer performance and the accuracy of 16-bit floating point. According to AMD, it is a new industry standard – meaning existing models can leverage it – and AMD’s implementation is the first to be done in hardware.
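
To make the idea concrete, here is a minimal Python sketch of block floating point in general: a group of values shares one exponent, and each value keeps only a small integer mantissa. This is not AMD’s implementation. The block size of 8, the signed 8-bit mantissa, the 8-bit shared exponent, and the function names are all assumptions chosen for illustration.

```python
import math

# Assumptions for illustration only (not taken from AMD documentation):
BLOCK_SIZE = 8      # number of values that share one exponent
MANTISSA_BITS = 8   # signed integer mantissa stored per value
EXPONENT_BITS = 8   # one shared exponent stored per block


def quantize_block(values):
    """Return (shared_exponent, mantissas) for one block of floats."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0, [0] * len(values)
    # frexp returns (m, e) with max_abs == m * 2**e and 0.5 <= m < 1.
    _, exp = math.frexp(max_abs)
    # Pick the exponent so the largest magnitude fits in the signed mantissa.
    shared_exp = exp - (MANTISSA_BITS - 1)
    limit = 2 ** (MANTISSA_BITS - 1) - 1  # 127 for 8-bit mantissas
    mantissas = []
    for v in values:
        q = round(v / 2.0 ** shared_exp)
        mantissas.append(max(-limit, min(limit, q)))  # clamp to range
    return shared_exp, mantissas


def dequantize_block(shared_exp, mantissas):
    """Rebuild approximate floats from the shared exponent and mantissas."""
    return [m * 2.0 ** shared_exp for m in mantissas]


def bits_per_value():
    """Average storage cost per value, amortizing the shared exponent."""
    return (BLOCK_SIZE * MANTISSA_BITS + EXPONENT_BITS) / BLOCK_SIZE


if __name__ == "__main__":
    block = [1.0, 0.5, -0.25, 0.125, 0.0, 0.75, -1.0, 0.3]
    exp, mants = quantize_block(block)
    print(exp, mants)
    print(dequantize_block(exp, mants))
    print(bits_per_value())
```

Under these assumptions, the multiply-accumulate work inside a block happens on 8-bit integers, while the shared exponent preserves floating-point dynamic range from block to block. That is the general intuition behind the "8-bit speed, 16-bit accuracy" description.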

The Computex show has a long history of being a critical launch point for traditional PC components, and AMD started things off with its next-generation desktop PC parts – the Ryzen 9000 series – which don’t have a built-in NPU. What they do have, however, is the kind of performance that gamers, content creators, and other PC system builders are constantly seeking for traditional PC applications. Let’s not forget how important that still is, even in the era of “AI PCs.”

Of course, AMD also had new offerings in the AI PC space – a new series of mobile-focused parts for the new Copilot+ PCs that Microsoft and other partners announced a few weeks ago.

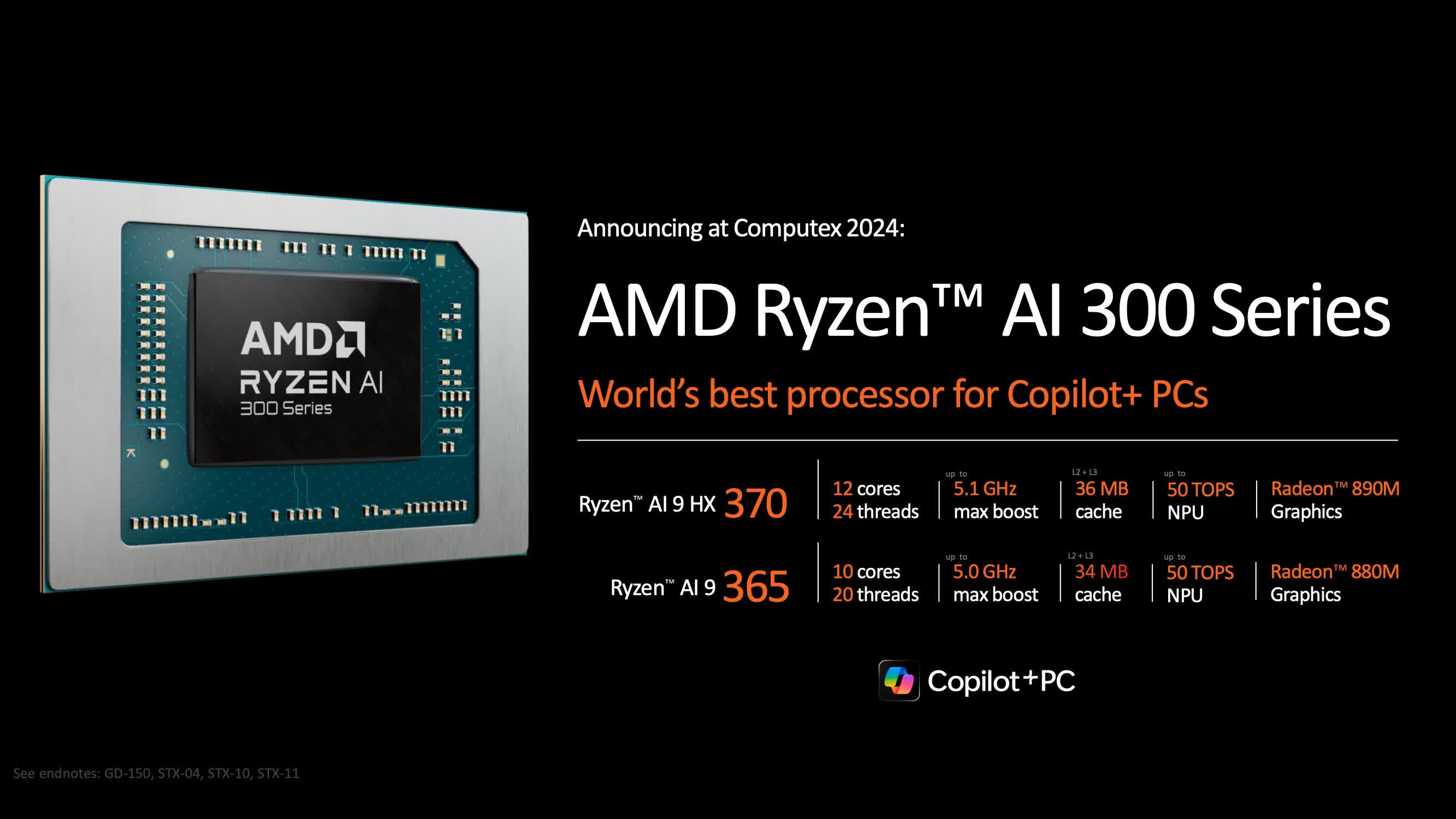

AMD is branding them as the Ryzen AI 300 series to reflect the fact that they’re the third generation of laptop chips built by AMD with an integrated NPU. A little-known fact is that AMD’s Ryzen 7040 – announced in January 2023 – was the first chip with built-in AI acceleration, followed by the Ryzen 8040 at the end of last year.

We knew this new chip was coming, but what was somewhat unexpected is how much new technology AMD has integrated into the AI 300 (codenamed Strix Point). It features new Zen 5 CPU cores, an upgraded RDNA 3.5 GPU architecture, and a new NPU built on XDNA2 that offers an impressive 50 TOPS of performance.

What’s also surprising is how quickly laptops with Ryzen AI 300s are coming to market. Systems are expected as soon as next month, just weeks after the first Qualcomm Snapdragon X Copilot+ PCs are set to ship.

One big challenge, however, is that the x86 CPU and AMD-specific NPU versions of the Copilot+ software won’t be ready when these AMD-powered PCs are launched. Apparently, Microsoft didn’t expect x86 vendors like AMD and Intel to be done so soon and prioritized its work for the Arm-based Qualcomm devices. As a result, these will be Copilot+ “Ready” systems, meaning they’ll need a software upgrade – likely in early fall – to become full-blown next-gen AI PCs.

Still, this vastly sped-up timeframe – which Intel is also expected to announce for its new chips this week – has been impressive to watch. Early in the development of AI PCs, the common thought was that Qualcomm would have a 12- to 18-month lead over both AMD and Intel in developing a part that met Microsoft’s 40+ NPU TOPS performance specs.

The strong competitive threat from Qualcomm, however, inspired the two PC semiconductor stalwarts to move their schedules forward, and it looks like they’ve succeeded.

On the datacenter side, AMD previewed its latest fifth-gen Epyc CPUs (codename Turin) and its Instinct MI300 series GPU accelerators. As with the PC chips, AMD’s latest server CPUs are built around Zen 5, with competitive performance improvements for certain AI workloads that are up to 5x faster than Intel equivalents, according to AMD.

For GPU accelerators, AMD announced the Instinct MI325, which offers twice the HBM3E memory of any card on the market. More importantly, as Nvidia did last night, AMD also unveiled an annual cadence for improvements to its GPU accelerator line and provided details up to 2026.

Next year’s MI350, which will be based on a new CDNA4 GPU compute architecture, will leverage both the increased memory capacity and this new architecture to deliver an impressive 35x improvement versus current cards. For perspective, AMD believes it will give them a performance lead over Nvidia’s latest generation products.

AMD is one of the few companies that’s been able to gain any traction against Nvidia for large-scale acceleration, so any improvements to this line of products are bound to be well-received by anyone looking for an Nvidia alternative – both large cloud computing providers and enterprise data centers.

Taken as a whole, the AMD story continues to advance and impress. It’s amazing to see how far the company has come in the last 10 years, and it’s clear they continue to be a driving force in the computing and semiconductor world.

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow Bob on Twitter @bobodtech