On Thursday, AMD introduced its new MI325X AI accelerator chip, which is set to roll out to data center customers in the fourth quarter of this year. At an event hosted in San Francisco, the company claimed the new chip offers “industry-leading” performance compared with Nvidia’s current H200 GPUs, which are widely used in data centers to power AI applications such as ChatGPT.

With its new chip, AMD hopes to narrow the performance gap with Nvidia in the AI processor market. The Santa Clara-based company also revealed plans for its next-generation MI350 chip, which is positioned as a head-to-head competitor to Nvidia’s new Blackwell system, with an expected shipping date in the second half of 2025.

In an interview with the Financial Times, AMD CEO Lisa Su expressed her ambition for AMD to become the “end-to-end” AI leader over the next decade. “This is the beginning, not the end of the AI race,” she told the publication.

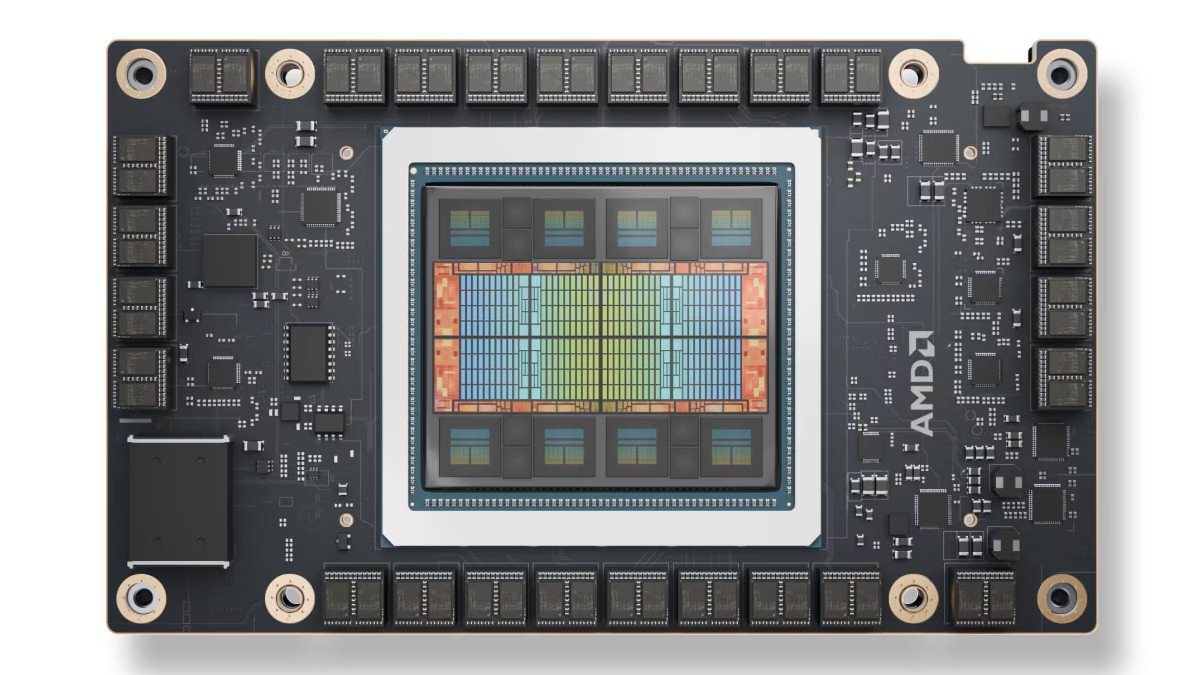

According to AMD’s website, the newly announced MI325X accelerator contains 153 billion transistors and is built on the CDNA3 GPU architecture using TSMC’s 5 nm and 6 nm FinFET lithography processes. The chip includes 19,456 stream processors and 1,216 matrix cores spread across 304 compute units. With a peak engine clock of 2100 MHz, the MI325X delivers up to 2.61 PFLOPs of peak eight-bit precision (FP8) performance. For half-precision (FP16) operations, it reaches 1.3 PFLOPs.
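
To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python that breaks the quoted totals down per compute unit. It uses only the numbers cited above; the function and variable names are illustrative, and the per-clock throughput is derived from the quoted peaks rather than taken from an AMD specification.

```python
# Figures as quoted in the article (attributed to AMD's website).
STREAM_PROCESSORS = 19_456
MATRIX_CORES = 1_216
COMPUTE_UNITS = 304
PEAK_CLOCK_HZ = 2.1e9        # 2100 MHz peak engine clock
PEAK_FP8_FLOPS = 2.61e15     # 2.61 PFLOPs
PEAK_FP16_FLOPS = 1.3e15     # 1.3 PFLOPs


def per_compute_unit(total, compute_units=COMPUTE_UNITS):
    """Split a chip-wide resource count evenly across compute units."""
    return total / compute_units


def flops_per_cu_per_clock(peak_flops,
                           clock_hz=PEAK_CLOCK_HZ,
                           compute_units=COMPUTE_UNITS):
    """Implied operations per compute unit per clock cycle.

    Assumes the quoted peak is reached with every compute unit busy
    at the peak engine clock; this is an inference, not an AMD figure.
    """
    return peak_flops / (clock_hz * compute_units)


stream_per_cu = per_compute_unit(STREAM_PROCESSORS)
matrix_per_cu = per_compute_unit(MATRIX_CORES)
fp8_rate = flops_per_cu_per_clock(PEAK_FP8_FLOPS)
fp16_rate = flops_per_cu_per_clock(PEAK_FP16_FLOPS)

print(f"Stream processors per compute unit: {stream_per_cu:.0f}")
print(f"Matrix cores per compute unit:      {matrix_per_cu:.0f}")
print(f"Implied FP8 FLOPs/CU/clock:         {fp8_rate:.0f}")
print(f"Implied FP16 FLOPs/CU/clock:        {fp16_rate:.0f}")
print(f"FP8 to FP16 ratio:                  {fp8_rate / fp16_rate:.2f}")
```

By this arithmetic, each compute unit holds 64 stream processors and 4 matrix cores, and the quoted FP8 peak is roughly twice the FP16 peak, which is what one would expect when halving the operand width.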